Microsoft released the first release candidate of Internet Explorer 9. Amongst other features, the latest incarnation of Microsoft’s browser now includes support for the W3C Geolocation API. This means that, if you allow them to do so, the websites you visit can determine your current real-world location as you browse, and potentially update their content accordingly. Location-aware applications are already well established in the mobile application market (with apps like foursquare and location-enabled Twitter clients proving popular), but they are still relatively scarce in the traditional web application domain.

The geolocation API has been around for a while, and I played around with it a bit last summer. Last time I looked at it, I decided it was only of limited practical value, mainly for the following reasons:

- Firstly, there was very limited support among mainstream desktop browsers.

- Secondly, for reasons of security, users have to “opt-in” to share their location. This is generally presented as a fairly ugly security warning in the browser, and rather destroys any attempts to seamlessly adapt content for the user’s location.

- The implementations that did exist seemed buggy or very slow.

With the support for geolocation now added in IE9 (and already present in Firefox, Chrome, and Safari amongst others), at least the first one of these points seems no longer valid. So, I thought it was time to revisit the geolocation API.

A webpage that plots the user’s location on a Bing Map

Fortunately, the Geolocation API is simple and relatively well-documented, so I threw together a quick webpage that displays a Bing Map and (assuming you agree to share your location) centres it on your current location, as reported by your browser.

Here’s the code:

[sourcecode language="plain"]<!DOCTYPE HTML PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">

<html xmlns="http://www.w3.org/1999/xhtml">

<head>

<title></title>

<meta http-equiv="Content-Type" content="text/html; charset=utf-8" />

<script type="text/javascript" src="http://ecn.dev.virtualearth.net/mapcontrol/mapcontrol.ashx?v=7.0"></script>

<script type="text/javascript">

var map = null;

function GetMap() {

// Initialise the map

map = new Microsoft.Maps.Map(document.getElementById("mapDiv"),

{ credentials: "YOURBINGMAPSKEY",

center: new Microsoft.Maps.Location(54, -4),

zoom: 6

});

// Check if the browser supports geolocation

if (navigator.geolocation) {

// Request the user’s location

navigator.geolocation.getCurrentPosition(locateSuccess, locateError, { maximumAge: 0, timeout: 30000, enableHighAccuracy: true });

}

else {

window.status = "Geolocation is not supported on this browser.";

}

}

/*

* Handle a successful geolocation

*/

function locateSuccess(loc) {

var accuracy = loc.coords.accuracy;

// Determine the best zoom level

var zoomLevel = 14;

if (accuracy > 500)

{ zoomLevel = 11; }

else if (accuracy > 100)

{ zoomLevel = 14; }

else if (accuracy <= 100)

{ zoomLevel = 17; }

// Set the user’s location

var userLocation = new Microsoft.Maps.Location(loc.coords.latitude, loc.coords.longitude);

// Change the map view

map.setView({ center: userLocation, zoom: zoomLevel });

// Plot a circle to show the area in which the user is located

var locationArea = plotCircle(userLocation, accuracy);

map.entities.push(locationArea);

}

/*

* Handle any errors from the geolocation request

*/

function locateError(geoPositionError) {

switch (geoPositionError.code) {

case 0: // UNKNOWN_ERROR

alert('An unknown error occurred');

break;

case 1: // PERMISSION_DENIED

alert('Permission to use Geolocation API denied');

break;

case 2: // POSITION_UNAVAILABLE

alert('Could not determine location');

break;

case 3: // TIMEOUT

alert('The geolocation request timed out');

break;

default:

}

}

/*

* Plot a circle of a given radius about a point

*/

function plotCircle(loc, radius) {

var R = 6378137;

var lat = (loc.latitude * Math.PI) / 180;

var lon = (loc.longitude * Math.PI) / 180;

var d = parseFloat(radius) / R;

var locs = new Array();

for (var x = 0; x <= 360; x++) {

var p = new Microsoft.Maps.Location();

var brng = x * Math.PI / 180;

p.latitude = Math.asin(Math.sin(lat) * Math.cos(d) + Math.cos(lat) * Math.sin(d) * Math.cos(brng));

p.longitude = ((lon + Math.atan2(Math.sin(brng) * Math.sin(d) * Math.cos(lat), Math.cos(d) - Math.sin(lat) * Math.sin(p.latitude))) * 180) / Math.PI;

p.latitude = (p.latitude * 180) / Math.PI;

locs.push(p);

}

return new Microsoft.Maps.Polygon(locs, { fillColor: new Microsoft.Maps.Color(128, 0, 0, 255), strokeColor: new Microsoft.Maps.Color(192, 0, 0, 255) });

}

</script>

</head>

<body onload="GetMap();">

<p>Remember to <q>Share Location</q> when prompted to allow your browser to use the geolocation API.</p>

<div id="mapDiv" style="position: relative; width: 640px; height: 480px;"></div>

</body>

</html> [/sourcecode]
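The circle calculation in plotCircle does not depend on Bing Maps, so it can be pulled out and checked on its own. The following is a minimal sketch of that idea rather than part of the page above: circlePoints, toRadians and toDegrees are hypothetical helper names, and the steps parameter is an assumption added so that the number of vertices can be varied. It uses the same spherical destination-point formula and the same Earth radius as plotCircle.

```javascript
// Hypothetical helpers (not part of the Bing Maps API).
var EARTH_RADIUS_METRES = 6378137; // same value as R in plotCircle

function toRadians(deg) { return deg * Math.PI / 180; }
function toDegrees(rad) { return rad * 180 / Math.PI; }

// Returns the vertices of a circle of `radiusMetres` around a centre given in
// decimal degrees, as plain { latitude, longitude } objects (also in degrees).
function circlePoints(latDeg, lonDeg, radiusMetres, steps) {
  var lat = toRadians(latDeg);
  var lon = toRadians(lonDeg);
  var d = radiusMetres / EARTH_RADIUS_METRES; // angular distance in radians
  var points = [];
  for (var i = 0; i <= steps; i++) {
    var brng = 2 * Math.PI * i / steps; // bearing, clockwise from north
    var pLat = Math.asin(Math.sin(lat) * Math.cos(d) +
                         Math.cos(lat) * Math.sin(d) * Math.cos(brng));
    var pLon = lon + Math.atan2(Math.sin(brng) * Math.sin(d) * Math.cos(lat),
                                Math.cos(d) - Math.sin(lat) * Math.sin(pLat));
    points.push({ latitude: toDegrees(pLat), longitude: toDegrees(pLon) });
  }
  return points;
}
```

Each returned point could then be wrapped in a Microsoft.Maps.Location before being passed to the Polygon constructor, as plotCircle does.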

Comparing Browser Results

The results were… interesting. I loaded the page above in three browsers, all running under Windows 7 64-bit:

- Microsoft IE9 (RC1)

- Firefox (3.6.13)

- Google Chrome (9.0.597.98)

Firstly, I tested them on my desktop computer, which has a wired connection to my broadband router (this is important!).

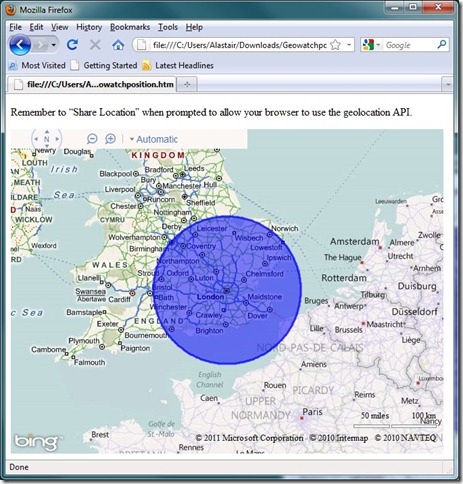

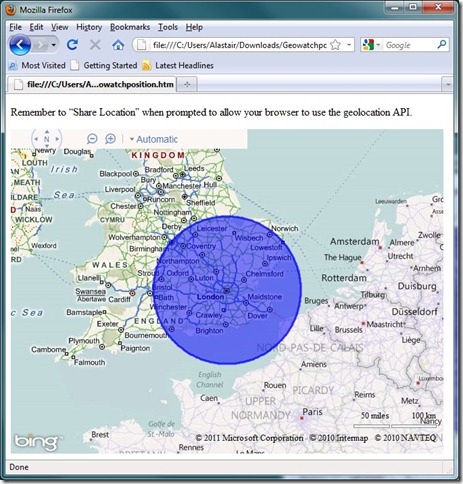

Firefox – Wired Connection

Firefox was the first to be tested, and it successfully returned a geolocation result, although the location accuracy was fairly abysmal – placing me somewhere in a circle of radius about 100 miles around London. I’m in Norwich, which is just inside the Northeast extent of the circle, so technically the geolocation was correct within the bounds of its own stated accuracy, but hardly convincing.

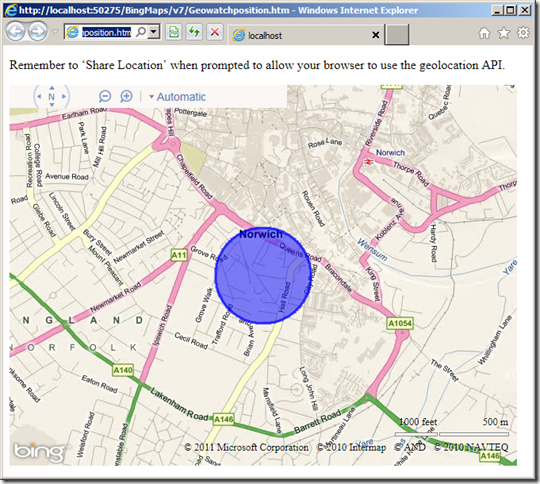

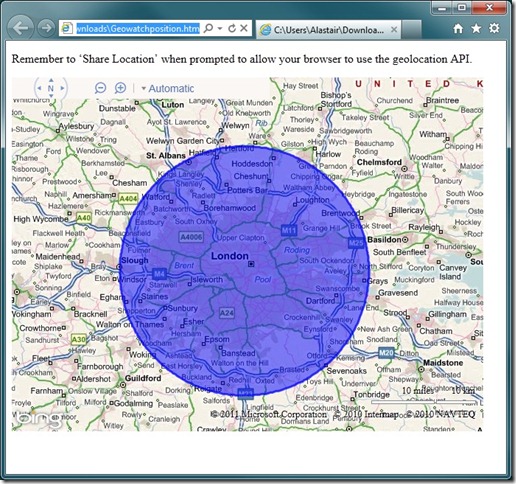

Internet Explorer 9 – Wired Connection

IE9 was next, and it returned a result with a much smaller stated margin of error, although, as far as I’m concerned, it was less correct than the previous result from Firefox. The result was now shown with an accuracy of about 20 miles around London: a smaller area, but crucially one that no longer included my actual location!
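Whether a result is “correct within the bounds of its own stated accuracy” can be checked mechanically when the true location is known. The sketch below is one way to do that, using the haversine great-circle distance; distanceMetres and isWithinAccuracy are hypothetical helper names, and the coordinates are approximate city-centre values for London and Norwich chosen for illustration, not the readings the browsers actually returned.

```javascript
// Hypothetical helpers for checking a reported fix against a known location.
var EARTH_RADIUS_METRES = 6378137; // same sphere radius as plotCircle uses

// Great-circle (haversine) distance in metres between two points in degrees.
function distanceMetres(lat1, lon1, lat2, lon2) {
  var toRad = Math.PI / 180;
  var dLat = (lat2 - lat1) * toRad;
  var dLon = (lon2 - lon1) * toRad;
  var a = Math.pow(Math.sin(dLat / 2), 2) +
          Math.cos(lat1 * toRad) * Math.cos(lat2 * toRad) *
          Math.pow(Math.sin(dLon / 2), 2);
  return 2 * EARTH_RADIUS_METRES * Math.asin(Math.sqrt(a));
}

// True if `actual` lies inside the accuracy circle of `coords`, an object
// shaped like Position.coords (latitude, longitude, accuracy in metres).
function isWithinAccuracy(coords, actual) {
  return distanceMetres(coords.latitude, coords.longitude,
                        actual.latitude, actual.longitude) <= coords.accuracy;
}

// Illustrative values only (assumptions, not measured results).
var MILE = 1609.344; // metres
var norwich = { latitude: 52.6309, longitude: 1.2974 };
var wide = { latitude: 51.5074, longitude: -0.1278, accuracy: 100 * MILE };
var narrow = { latitude: 51.5074, longitude: -0.1278, accuracy: 20 * MILE };

console.log(isWithinAccuracy(wide, norwich));   // true
console.log(isWithinAccuracy(narrow, norwich)); // false
```

With these assumed centres, a 100-mile circle around London just reaches Norwich while a 20-mile circle does not, which matches the pattern described above.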

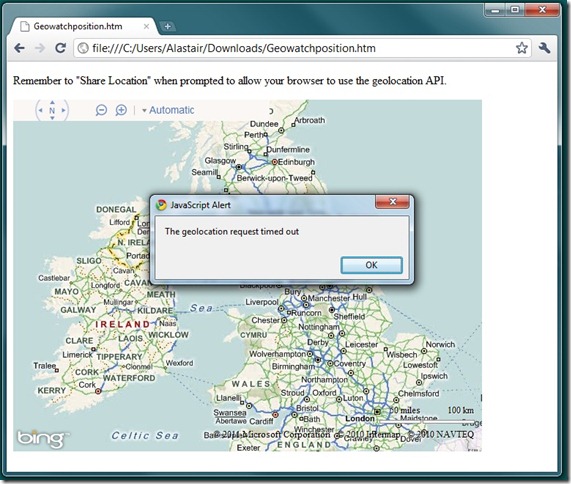

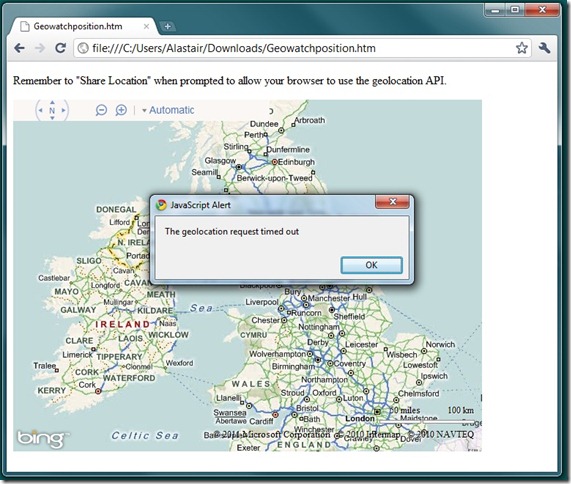

Chrome – Wired Connection

Just when I thought this couldn’t get any worse, I loaded the page in Google Chrome, only to be greeted by a timeout error. I’d set the timeout in my script to 30 seconds, which already seemed way too long for any kind of decent user-experience, but Chrome didn’t even get a result within that time. Oh dear.
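One way to avoid making users wait the full 30 seconds is to try a short, high-accuracy request first and only fall back to a coarser or cached position if that times out. The sketch below illustrates the idea. locateWithFallback is a hypothetical helper, the 5 and 10 second timeouts and the 10 minute cache age are arbitrary assumptions, and it is untested against the browsers above, so it may not have changed any of these results. The geo argument would be navigator.geolocation in a browser; it is passed in as a parameter so that the logic can be exercised without one.

```javascript
// Hypothetical helper: `geo` is any object with a W3C-style
// getCurrentPosition(success, error, options) method.
function locateWithFallback(geo, onSuccess, onError) {
  var TIMEOUT = 3; // PositionError.TIMEOUT in the W3C Geolocation API

  // First attempt: a fresh, high-accuracy fix, giving up after 5 seconds.
  geo.getCurrentPosition(onSuccess, function (err) {
    if (err.code !== TIMEOUT) {
      onError(err); // e.g. permission denied: retrying would not help
      return;
    }
    // Second attempt: accept a coarser or cached fix (up to 10 minutes old).
    geo.getCurrentPosition(onSuccess, onError,
      { enableHighAccuracy: false, maximumAge: 600000, timeout: 10000 });
  }, { enableHighAccuracy: true, maximumAge: 0, timeout: 5000 });
}
```

In the page above, this could replace the direct call as locateWithFallback(navigator.geolocation, locateSuccess, locateError).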

Now, at this point it’s worth thinking about how the geolocation API obtains your current location. On a mobile device, it’s pretty easy: most modern smartphones have inbuilt GPS that gives very accurate location readings or, failing that, a reasonable estimate can be obtained by triangulating your position from the network towers from which your phone can currently receive a signal.

On a computer, the geolocation API relies upon either your IP address or the known locations of nearby wireless networks, such as the one to which you are connected. My broadband provider is a national network, based in London, so if you trace my IP address you’ll likely get the best geolocation match somewhere in London. That explains the IE9 and Firefox results above. So what if I repeat the test on my laptop, still connected to my own home router, but this time via wi-fi?

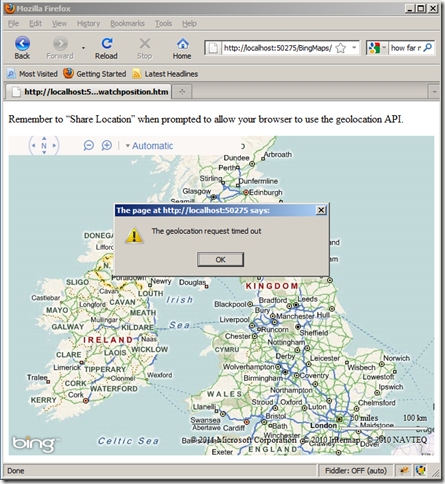

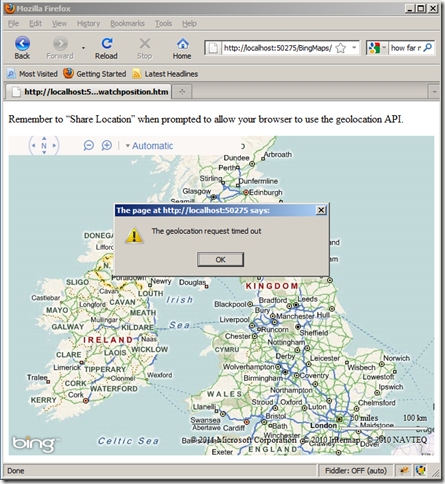

Firefox – Wireless Connection

Oh for Pete’s sake – now Firefox is timing out! Maybe this whole thing was a bad idea. I can barely bring myself to test IE9, it’s bound to be worse…

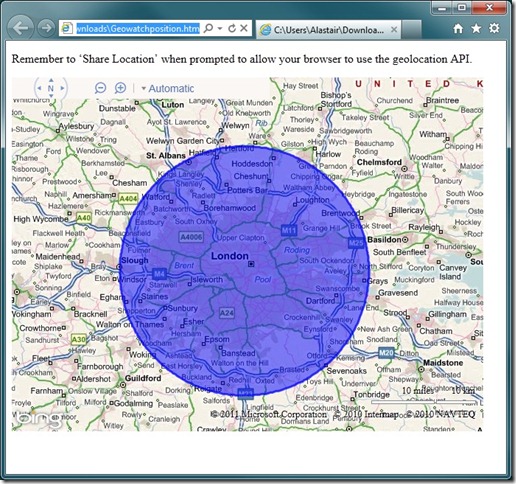

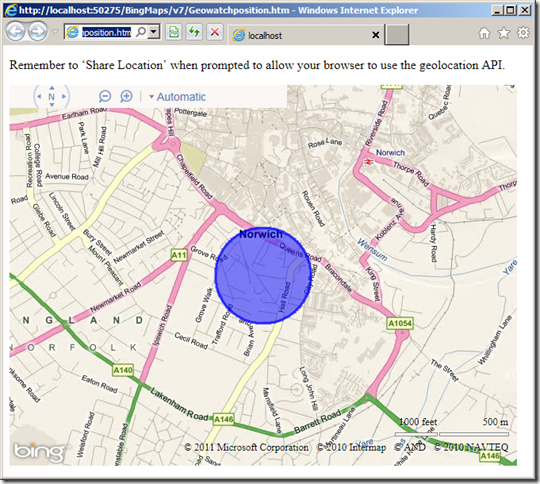

Internet Explorer – Wireless Connection

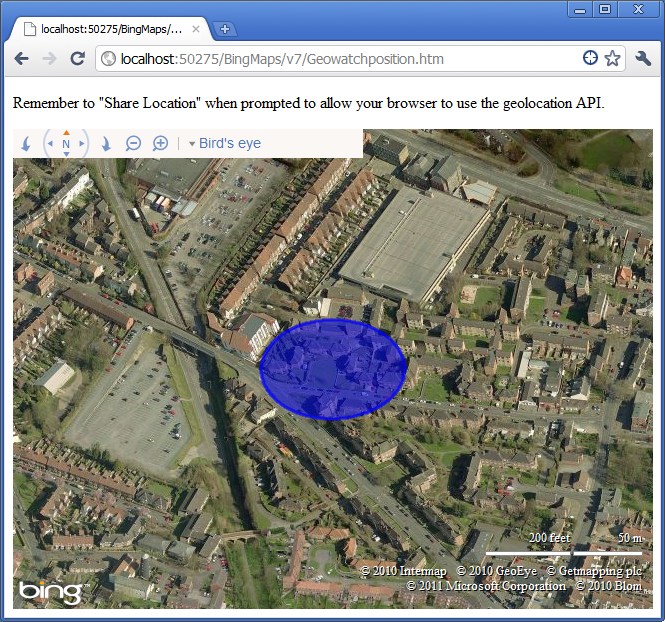

…oh, but wait! Finally things are looking up! IE9 successfully geolocates in well under a second and, what’s more, the result is accurate to under 500 metres and really does include my current location. Finally, this is something that could sensibly be used to create location-specific services for me.

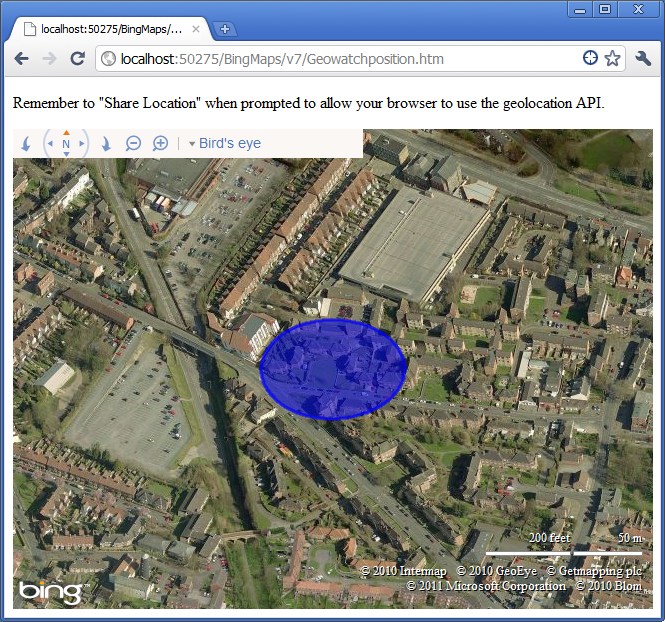

Chrome – Wireless Connection

Holy smokes! The Chrome result, when used over a wireless network, is worthy of note for two reasons. Firstly, it is lightning fast, loading the page and zooming the map in milliseconds. Secondly, that really is where I am right now, accurate to within about 30 metres. And remember, I’ve not got any special hardware or anything here: I’m connecting to my own broadband router via my own personal wi-fi. Crikey!

Summary

It’s good to see that IE9 has adopted the geolocation standard (and note that Microsoft really has adopted the standard: I didn’t have to do any browser-specific hacks to make this test work across the three browsers), but I still stand by my previous conclusion about the geolocation standard: it’s just not yet at a point where it can add much practical value.

Even though the implementations are consistent across the three browsers tested here, the results are so wildly different as to make the API completely unreliable. To my surprise, Firefox (a browser I have evangelised in the past) was the worst in the test, failing to deliver at all over a wireless network and delivering a next-to-useless result over a wired one. Chrome delivered a scarily accurate result over wi-fi, but timed out over a wired connection. IE9 was the only browser to actually deliver a result in both cases with anything like reasonable accuracy. However, even then, over the wired connection it is necessarily retrieving the location of my IP address in London rather than my actual location, so it’s hard to see how it could add much value at anything other than a national level (for which you may as well look up the client’s IP address in an IP geolocation database such as http://www.hostip.info/, for which you don’t need to ask the user’s permission).
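Given how much the reported accuracy varied between these tests, one defensive approach is to look at coords.accuracy before deciding how far to adapt content. The following is only a sketch of that idea: usefulGranularity is a hypothetical helper, and the thresholds and labels are illustrative assumptions rather than values taken from the specification or from the tests above.

```javascript
// Hypothetical helper: map a reported accuracy (in metres) to the coarsest
// level at which the fix is probably still meaningful. Thresholds are
// illustrative assumptions.
function usefulGranularity(accuracyMetres) {
  if (accuracyMetres <= 100) { return "street"; }
  if (accuracyMetres <= 5000) { return "town"; }
  if (accuracyMetres <= 50000) { return "region"; }
  return "country";
}
```

Inside locateSuccess, a page could call usefulGranularity(loc.coords.accuracy) and show only national-level content when the answer is "country".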