One of the most exciting aspects of Google’s Android platform is the rapid release pace, so support for cutting-edge technologies can be included in the platform early on. As engineers, we’re excited about such features, because we like to tinker and test the limits of new technologies. But the real challenge is using these new capabilities to integrate easy-to-use features for end-users, so that everyone can be excited. The introduction of the Near-Field Communication (NFC) API in Android 2.3, accompanying the release of an NFC hardware feature in Google’s Nexus S, gave us the opportunity to do just that.

NFC is meant to send and receive small amounts of data. This data can be read from passive (non-powered) devices (e.g. credit cards or interactive posters), or active devices (e.g. payment kiosks). NFC can also be used to communicate between two NFC-equipped devices, thanks to a protocol introduced by Google that defines a way for two active devices to exchange messages in the NFC Data Exchange Format (NDEF). This protocol, the NDEF Push Protocol (NPP), is implemented in Android 2.3.3 and later.

The NPP is very simple: it allows us to send an NDEF message to another device, which will then process the message as if it had been read from a passive tag. Thus, to understand how to use NPP, we need only understand the NDEF message. An NDEF message is a collection of NDEF records. An NDEF record consists of a short header describing the record’s data payload, followed by the payload itself.
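To make the header-plus-payload layout concrete, here is a minimal sketch in plain Java (no Android classes) that hand-encodes a single "short" NDEF record. The class and method names are hypothetical, the sample MIME type and payload are placeholders, and the sketch deliberately ignores the optional ID field and chunked records. On Android you would use `NdefRecord` rather than building bytes yourself.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical illustration class; not part of the Android API.
public class NdefRecordSketch {
    // Header flag bits defined by the NDEF specification.
    static final int FLAG_MB = 0x80; // Message Begin: first record in the message
    static final int FLAG_ME = 0x40; // Message End: last record in the message
    static final int FLAG_SR = 0x10; // Short Record: payload length fits in one byte
    static final int TNF_MIME_MEDIA = 0x02; // type field holds a MIME type

    /** Encodes one short record that is the only record in its message (no ID field). */
    static byte[] encodeSingleShortRecord(int tnf, byte[] type, byte[] payload) {
        if (type.length > 255 || payload.length > 255) {
            throw new IllegalArgumentException("short records hold at most 255 bytes");
        }
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        out.write(FLAG_MB | FLAG_ME | FLAG_SR | tnf); // flags plus type name format
        out.write(type.length);                       // TYPE_LENGTH
        out.write(payload.length);                    // PAYLOAD_LENGTH (one byte, SR set)
        out.write(type, 0, type.length);              // TYPE
        out.write(payload, 0, payload.length);        // PAYLOAD
        return out.toByteArray();
    }

    public static void main(String[] args) {
        byte[] type = "text/plain".getBytes(StandardCharsets.US_ASCII);
        byte[] payload = "hello".getBytes(StandardCharsets.UTF_8);
        byte[] record = encodeSingleShortRecord(TNF_MIME_MEDIA, type, payload);
        System.out.println("header byte: 0x" + Integer.toHexString(record[0] & 0xFF));
        System.out.println("total bytes: " + record.length);
    }
}
```

The three header bytes are followed directly by the type and the payload, so the overhead for a small record like this one is only a few bytes.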

This new NFC/NPP capability enables the exchange of a few hundred bytes of information in a well-defined format between devices, using proximity both as the trigger and as a form of authentication. In other words, we can now share a small amount of data between two devices without going through the pairing or association steps required by more conventional (and higher-bandwidth) channels like Bluetooth or WiFi. The fact that the two phones are in close proximity is enough evidence to convince the NFC software that the devices are eligible to receive information from each other.

The NFC API for Android handles all of the details of receiving and parsing NFC messages. It then decides what to do with the message by investigating the intent filters registered for applications on the device. In some cases the messages may have characteristics that can lead to finer-grained dispatching. In the case of an NDEF message, you can register for NFC messages at varying levels of detail: from as vague as any NDEF message to as detailed as an NDEF message containing a URI matching a given pattern. A pleasant result of this approach is that the operating system can have “catch-all” applications for messages that have unknown details.
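The idea of preferring a specific registration and falling back to a "catch-all" can be pictured with a toy model. This is only an illustration in plain Java, not Android's actual intent resolution logic; the `Filter` class, the `resolve` method, and the app names are all hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model of "most specific match first, catch-all otherwise".
public class DispatchSketch {
    /** A hypothetical registration: an app name plus the MIME type it wants (null = any NDEF message). */
    static final class Filter {
        final String app;
        final String mimeType;

        Filter(String app, String mimeType) {
            this.app = app;
            this.mimeType = mimeType;
        }
    }

    /** Returns the app with an exact MIME match, else the first catch-all, else null. */
    static String resolve(List<Filter> filters, String messageMimeType) {
        String catchAll = null;
        for (Filter f : filters) {
            if (f.mimeType == null) {
                if (catchAll == null) {
                    catchAll = f.app; // remember the first catch-all as a fallback
                }
            } else if (f.mimeType.equals(messageMimeType)) {
                return f.app; // a specific registration wins immediately
            }
        }
        return catchAll;
    }

    public static void main(String[] args) {
        List<Filter> filters = new ArrayList<>();
        filters.add(new Filter("catch-all viewer", null));
        filters.add(new Filter("doubleTwist", "application/x-doubletwist-taptoshare"));
        System.out.println(resolve(filters, "application/x-doubletwist-taptoshare"));
        System.out.println(resolve(filters, "text/plain"));
    }
}
```

In this model a message with the custom MIME type reaches the app that registered for it, while anything else lands in the catch-all viewer.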

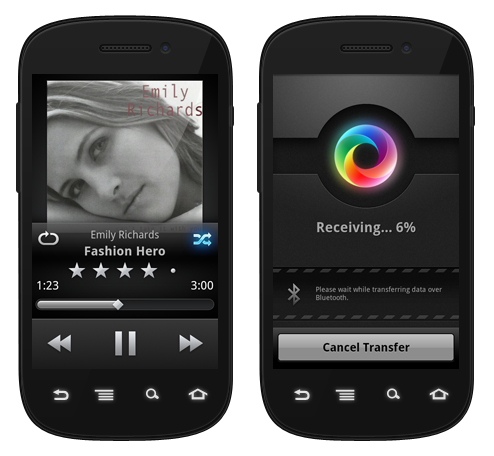

Let’s consider a possible application of the NFC technology in the context of the doubleTwist app. If you’re listening to a song and you want to share the details of the song with your friend, how can NFC help with this? Well, we can use NFC to bundle up a small bit of information about the song in a well-defined format, which the other phone can then parse and use to display information about the song. We will pass the metadata in a single NDEF Record containing a JSON object.

To ensure that our message is routed to doubleTwist on the receiving phone, we rely on Android’s ability to dispatch messages based on the data type of the payload, signaled by setting the type name field to TNF_MIME_MEDIA. This indicates that the type field of the NDEF record should be a MIME type as specified by RFC 2046. To take advantage of this capability, we create our own custom MIME type to use in the type field. Since the NDEF message must be composed of bytes, we’ll also need to convert the strings into byte arrays. The code looks like this:

[php]// Assume that musicService is an interface to our music playback service.
// Note: JSONObject.put() can throw JSONException, so this code must run
// inside a try block or a method that declares it.
JSONObject songMetadata = new JSONObject();
String title = musicService.getCurrentTrackTitle();
String artist = musicService.getCurrentTrackArtist();
String album = musicService.getCurrentTrackAlbum();
songMetadata.put("title", title);
songMetadata.put("artist", artist);
songMetadata.put("album", album);

// The custom MIME type goes in the record's type field.
String mimeType = "application/x-doubletwist-taptoshare";
byte[] mimeBytes = mimeType.getBytes(Charset.forName("UTF-8"));

// The JSON text is the record's payload.
String data = songMetadata.toString();
byte[] dataBytes = data.getBytes(Charset.forName("UTF-8"));

byte[] id = new byte[0]; // We don't use the id field
NdefRecord r = new NdefRecord(NdefRecord.TNF_MIME_MEDIA, mimeBytes, id, dataBytes);
NdefMessage m = new NdefMessage(new NdefRecord[]{r});[/php]

Similarly, our application registers an intent filter to define the Activity that will handle NFC messages that match this MIME type. So, when another phone running doubleTwist receives our message, doubleTwist will have priority in processing the message. To do this, we add an intent-filter element to AndroidManifest.xml’s entry for the activity that should launch to handle this NDEF message:

[php]<activity android:name=".SongInfoShare">
    <intent-filter>
        <action android:name="android.nfc.action.NDEF_DISCOVERED" />
        <data android:mimeType="application/x-doubletwist-taptoshare" />
        <category android:name="android.intent.category.DEFAULT" />
    </intent-filter>
</activity>[/php]

The SongInfoShare activity will be started whenever the NFC radio receives our special message from another NFC-capable phone. In the onCreate method for this activity, we can check the action of the intent that started the activity to verify that it’s the NDEF_DISCOVERED action, and then use the normal intent helper methods to get the NFC message data from the Intent. Here’s what the code will look like for our info-sharing example:

[php]public class SongInfoShare extends Activity {
    …
    @Override
    public void onCreate(Bundle b) {
        super.onCreate(b);
        Intent intent = getIntent();
        if (NfcAdapter.ACTION_NDEF_DISCOVERED.equals(intent.getAction())) {
            // The NDEF messages travel as an extra on the launching Intent,
            // not in the saved-state Bundle passed to onCreate.
            Parcelable[] msgs =
                    intent.getParcelableArrayExtra(NfcAdapter.EXTRA_NDEF_MESSAGES);
            if (msgs != null && msgs.length > 0) {
                NdefMessage m = (NdefMessage) msgs[0];
                NdefRecord[] r = m.getRecords();
                JSONObject sharedInfo = null;
                if (r != null && r.length > 0) {
                    try {
                        // Decode with the same charset the sender used.
                        String payload = new String(r[0].getPayload(), Charset.forName("UTF-8"));
                        sharedInfo = new JSONObject(payload);
                    } catch (JSONException e) {
                        Log.d(TAG, "Couldn't get JSON: ", e);
                    }
                }
                // At this point, we can use the contents of
                // sharedInfo to set up the contents of the activity.
            }
        }
    }
}[/php]

So, now we’re able to easily pass along song information to a friend by simply bringing the NFC radios close together. No cutting, pasting, tapping, or searching for options in menus!

So, what happens if our friend isn’t running doubleTwist? Well, the stock Android NFC handler app can help us. It will display any text or link records contained in the NDEF message it receives. So, for example, we can also share a pre-formatted link that will do a Google search for the song information. To achieve this, we can modify the end of our first code example as follows:

[php]NdefRecord r1 = new NdefRecord(NdefRecord.TNF_MIME_MEDIA, mimeBytes, id, dataBytes);

// Quote the artist and the title so each is searched as a phrase.
// URLEncoder.encode() can throw UnsupportedEncodingException.
String query = URLEncoder.encode("\"" + artist + "\" \"" + title + "\"", "UTF-8");
String searchLink = "http://www.google.com/?q=" + query;
byte[] searchBytes = searchLink.getBytes(Charset.forName("UTF-8"));
NdefRecord r2 = new NdefRecord(
        NdefRecord.TNF_ABSOLUTE_URI, searchBytes, id, searchBytes);
NdefMessage m = new NdefMessage(new NdefRecord[]{r1, r2});[/php]

Now, in the case where the receiving user doesn’t have doubleTwist installed, they will at least be presented with a link that they can click on to begin a Google search for more information about the song.
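To see what the encoded search link actually looks like, the query-building step can be tried on its own in plain Java. This is a small sketch: the class name, the method name, and the artist and title values are placeholders, and the base URL is simply the one used in the example above.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

// Hypothetical helper for experimenting with the link outside of Android.
public class SearchLinkSketch {
    /** Builds a search link that quotes the artist and the title as separate phrases. */
    static String buildSearchLink(String artist, String title) {
        try {
            String query = URLEncoder.encode("\"" + artist + "\" \"" + title + "\"", "UTF-8");
            return "http://www.google.com/?q=" + query;
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is required on every Java platform, so this should not happen.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // Placeholder values, not real track metadata.
        System.out.println(buildSearchLink("Example Artist", "Example Song"));
        // prints http://www.google.com/?q=%22Example+Artist%22+%22Example+Song%22
    }
}
```

Encoding matters here because artist and title strings routinely contain spaces, ampersands, and other characters that would otherwise break the URL.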

The first version of Google’s NDEF Push Protocol API has allowed us to bring some novel enhancements to our app using one of the newest available handset technologies. We are looking forward to further development of device-to-device NFC APIs that will allow even richer inter-device communication using just a tap!