For the last 8 years we at Code Factory have been making software that helps the blind and the visually impaired access their mobile phones. We’ve created this software for several different platforms. Last year we decided it was time to start doing something for the Android platform, due to its growing popularity and variety of devices.

From our past experience, developing a screen reader for a new platform required a lot of work, hacks, and investigation. Almost none of the previous platforms we supported implemented any sort of Accessibility API that we could use. Android, we thought, would be no exception to this rule. We were very wrong.

Starting at version 1.6, the Android operating system comes with a built-in Accessibility API that makes our application a lot easier to develop. All you do is create a service, which implements the AccessibilityService interface, declare it in your manifest and voilà! The system will start sending events, such as button presses, list navigation, focus changes, etc. to your service. You then convert this information to voice using a Text-to-Speech engine, and you have a screen reader.

The Accessibility API is not yet as complete as what you can find on a desktop PC, but it’s good enough to provide the users with basic user interface navigation, and we have no doubt that, as the Android platform evolves, so will the built-in Accessibility API.

We also wanted our application to go beyond a screen reader and provide an intuitive, easy-to-use UI that allowed the blind and visually impaired access to most of the phone’s functionality, such as messaging, web browsing, contact management, and so on.

We were pleased to see that we could do this Android. The existing set of UI controls, such as buttons and lists, can be overridden in order to provide custom functionality, such as speaking the text of the control. This made it possible for us to keep the user interface of our application consistent with Android, while at the same time providing the speech feedback that our users require.

By intercepting touch events within our application and using the gesture detectors that Android provides to developers, we were also able to make the touch screen accessible to our users, so they can use gestures like swipes to move through items of lists, or double-taps to activate items.

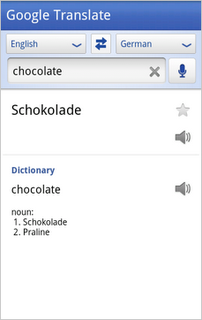

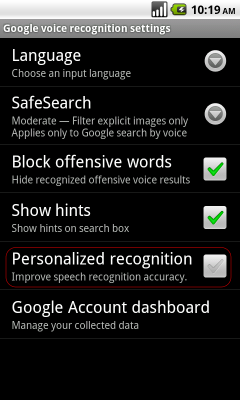

We really like how much we can accomplish with Android with so little code. Want to let a blind person create an SMS or email using voice? Simply use the SpeechRecognizer class. Want blind users who are walking on the street to know their exact location? Just use the LocationManager and Geocoder classes to give their exact street name and number.

Android lets us do a lot in a very efficient way. It wraps a whole bunch of cool technology into well-defined classes and interfaces. And if at any given time you need to know how something works behind the scenes, you just take a look at the source code, which is freely available to everyone.