Siri, or something similar, would become the norm for interfacing with smartphones and in doing so it would define the future of local search (and everything else). Well, it seems to have succeeded on the everything front, just not on the local search front. The Siri natural language interface is a metaphor for interaction that will supplant the need for typing and can provide a hands-free way to interact with smaller devices when typing is dangerous (i.e. driving) or awkward (i.e. all the time).

It works incredibly well and as John Gruber noted: “I wouldn’t say I can’t live without Siri. But I can say that I don’t want to.” It is that good.

It is hands down the best way to speedily create and send text messages, regardless of whether you are driving or sitting. It is the best way to get detailed driving directions in the Google Maps app on the iPhone. It is the best way to search the web, whether you want to use Google, Yahoo or Bing. In fact, it even fixes what was so miserably wrong with voice search in the Google app.

Its ability to understand what you want and what you are saying is uncanny. Even with background noise. I am a convert and while I will most definitely use it while driving, it may very well become my preferred interface for many other things as well.

It truly is a harbinger of a new level of functionality for interacting with your phone (and any small device for that matter). I won’t leave home without it.

EXCEPT FOR LOCAL SEARCH.

Siri can either interact with other apps or answer some things directly. For example, you can say “Text Aaron I will be late picking you up”, at which point it interprets your instructions, performs a voice-to-text translation, double checks its accuracy with you, understands that you want to text and then sends the note via the iMessage app. With some data types it will just answer you inside of the Siri environment. That is the design for interaction with both Wolfram Alpha and Yelp.

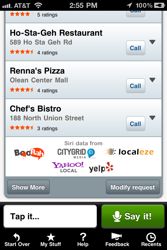

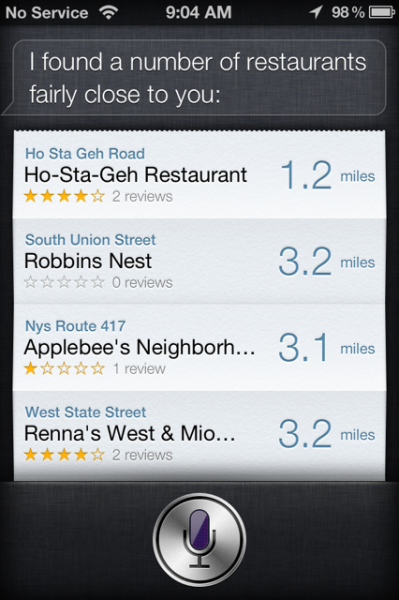

Danny Sullivan noted yesterday that when searching for local businesses, Siri accurately provides a list from Yelp but then doesn’t allow you to call the location, look at reviews or even get more details. For whatever reason, Apple and Yelp have decided to limit the local search functionality so much that it is essentially useless, forcing a user to a different data source for the information.

Having marvelled at Siri’s capability, it is easy to imagine saying to Siri: “make a reservation at the Ho-Ste-Geh restaurant for 2”, “read me the reviews for the Rennas” or even “Add the Robins Nest’s contact details to my address book”. But the local search capability doesn’t do any of that.

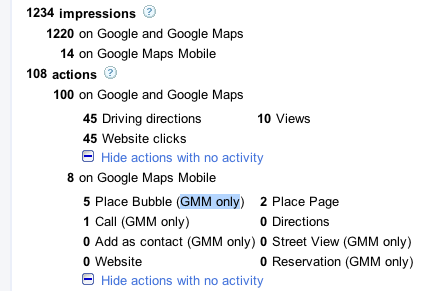

There are alternatives for a user of Siri to get local information. You just need to use the web search functionality of Google, Bing or Yahoo (use one or all three) by saying “Look on the web for a nearby restaurant” or “Google breakfast restaurants”. On the plus side, it no longer takes the 6 touches that Google voice search required to make a hands-free call. Now when doing a local recovery search on Google, it takes just one touch after the voice interaction to complete the call. And Siri does a significantly better job of getting the search right the first time than Google voice search ever did. You wonder where Siri has been hiding.

But in limiting the built-in local search functionality, Apple and Yelp are missing a chance to change user behaviors. In not changing user behaviors from the get-go, they may miss the opportunity to break the habit later on. Natural language voice search on the smartphone is a long game, and the 1 million iPhones sold so far are just a drop in the bucket of the market. The real game is yet to come.

There is every reason to believe, seeing what else Siri can do, that increased local search functionality will arrive. But regardless of whether this was Yelp’s choice or Apple’s, from where I sit, this is an opportunity lost to win a battle in a long war.